Making LLMs Reliable: Building an LLM-powered Web App to Generate Gift Ideas

It's the holidays for some of us at .txt, and that means struggling with what gifts to buy for loved ones.

Purchasing gifts can be difficult. I am not particularly good at noticing what things people might enjoy throughout the year, and the holidays are something of a scramble for me to find gift ideas for the people in my life.

So, I wrote a tool to help me find a few gift ideas! The tool is called .gifter. It’s a simple web application (also usable as a command-line tool), powered by Outlines, that demonstrates how we can make language models reliable and predictable.

Figure 1: Cameron with his gift bag.

What does .gifter do?

.gifter works by requesting a description of the individual you'd like to get ideas for. The tool passes the description to a language model and provides structured gift recommendations that can be displayed in a simple web application. You can optionally enable web search to learn more about each idea using Exa.

Figure 2: The .gifter home page.

At its core, .gifter is a proof-of-concept that has implications for developing compound AI systems. It demonstrates how we can transform flexible user input into precise, internally consistent data that integrates seamlessly with the rest of your system.

The key to making this work is structured generation.

What is structured generation?

When working with language models, getting consistent output can be challenging. Structured generation solves this by constraining the model so that its output always follows a specified format.
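To make the idea concrete, here is a toy sketch of constrained decoding in plain Python. It is not how Outlines is implemented (Outlines compiles regular expressions and JSON schemas into finite-state machines over the model's vocabulary), and the scoring function, token list, and `constrained_decode` helper are all hypothetical. At each step, tokens that cannot lead to an allowed output are masked out before the highest-scoring token is picked, so the result is always valid even when the "model" prefers garbage:

```python
from typing import Callable, Dict, List

EOS = "<eos>"  # special end-of-sequence token


def constrained_decode(
    score_fn: Callable[[str], Dict[str, float]],
    allowed_outputs: List[str],
    max_steps: int = 50,
) -> str:
    """Greedy decoding that only ever picks tokens keeping the output valid."""
    output = ""
    for _ in range(max_steps):
        scores = score_fn(output)
        # Mask: keep EOS only if the output is already complete, and keep
        # other tokens only if they extend `output` toward an allowed string.
        valid = {
            token: score
            for token, score in scores.items()
            if (token == EOS and output in allowed_outputs)
            or (
                token != EOS
                and any(s.startswith(output + token) for s in allowed_outputs)
            )
        }
        if not valid:
            raise ValueError(f"no valid continuation after {output!r}")
        best = max(valid, key=lambda t: valid[t])
        if best == EOS:
            return output
        output += best
    raise ValueError("exceeded max_steps without finishing")


# Hypothetical toy "model": it always scores the junk token "!!!" highest.
CATEGORIES = ["book", "activity", "clothing"]
SCORES = {"!!!": 10.0, "bo": 3.0, "act": 2.0, "cloth": 1.0,
          "ok": 0.5, "ivity": 0.5, "ing": 0.5, EOS: 0.1}


def toy_model(prefix: str) -> Dict[str, float]:
    return SCORES


result = constrained_decode(toy_model, CATEGORIES)
print(result)  # book
```

Without the mask, greedy decoding would keep choosing `"!!!"`; with it, the only reachable outputs are the allowed categories.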

For .gifter, each gift recommendation follows a simple structure:

- Name and description of the gift

- Reasoning for why it's a fit for the person

- A "personalized" card message (though you should probably personalize it yourself)

- Gift category (e.g., "book", "activity", "clothing")

We also require that the model reliably generate a list of gift recommendations.
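As a minimal sketch, this structure might be written as Pydantic classes like the ones below. The `Gift` class, the `gift_ideas` list, and the `search_query` field mirror names used in the search code later in this post; the remaining field names are assumptions for illustration. In Outlines' 0.x API, a class like `GiftIdeas` can be passed to `outlines.generate.json(model, GiftIdeas)` to build a generator whose output should always parse into it.

```python
from typing import List

from pydantic import BaseModel


class Gift(BaseModel):
    """One gift recommendation; every field is required."""

    name: str
    description: str
    reasoning: str  # why the gift fits the person
    card_message: str  # a "personalized" card message
    category: str  # e.g. "book", "activity", "clothing"
    search_query: str  # used later to look the gift up on the web


class GiftIdeas(BaseModel):
    """The top-level object the model generates: a list of gifts."""

    gift_ideas: List[Gift]


# The JSON schema derived from these classes is what constrains generation.
print(GiftIdeas.model_json_schema()["properties"]["gift_ideas"]["type"])  # array
```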

Outlines, our open-source Python library, ensures that the model always produces gift ideas in the format we specify. Outlines makes language models predictable tools that fit naturally into your application. Instead of wrestling with inconsistent outputs, you can focus on building features your users need.

If you'd like to learn more about Outlines, please see our GitHub, Discord, or documentation.

Structured generation in practice

In this walkthrough of .gifter, you'll see:

- How to turn a simple description into personalized gift ideas

- How structured outputs make building UIs straightforward

- How we can extend the system with reliable web search integration

Let's take a look at the implementation.

Building with Outlines

In the next section, you'll see:

- How I describe my brother to get personalized gift ideas

- How to include specific fields like descriptions and card messages in gift ideas

- How we can reliably retrieve search results to learn more about the gift ideas

Let me show you how this works by walking through .gifter's implementation.

How .gifter works

Here’s an example of something I’d give to .gifter:

I would like to give a gift to my brother. He likes depressing books written by Russian novelists, running, climbing things marketed as unclimbable, journaling, cycling, and whitewater kayaking.

You can provide this description through a command-line interface (omitted from this post; please see the demo here), or through a web front end built with Flask, a Python web framework.

The user interface

In the web front end, users see something like this:

Figure 3: The .gifter user interface.

The form has three key elements:

- A simple text box: the standard language model interface most users are familiar with

- Minimum and maximum fields: how many ideas the model should generate

- A check box to enable web search, which runs additional research on each gift idea

The text box is straightforward: language models excel at processing free-form text. The minimum and maximum fields are more interesting.

Why constrain output length?

Language models are like toddlers. You can ask them in the prompt to limit their output, but they might not listen:

Please produce between one and five gift ideas. Do not produce more than five ideas, and do not produce less than one gift idea. If you produce any number of ideas outside this range, I will be fired and my house will be repossessed.

Even high-quality models can ignore length constraints in prompts. No matter how dramatically you phrase your instructions, models may still generate too many or too few outputs. Smaller, less resource-intensive models are even more likely to deviate, forcing developers to build monitoring systems to validate outputs.

Structured generation eliminates this problem entirely. With Outlines, it becomes impossible for the model to generate more or fewer gift ideas than specified. Users and developers can trust that the output will always match their requirements.
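Because the form lets the user choose the bounds, the schema has to be built per request. One way to do that, sketched below under the assumption that .gifter uses Pydantic v2, is `pydantic.create_model` with `Field(min_length=..., max_length=...)` on the list. These bounds show up in the JSON schema as `minItems` and `maxItems`, which Outlines' JSON-schema handling turns into part of the generation constraint (worth checking against the Outlines version you use). The `make_gift_ideas_model` helper and the two-field `Gift` are hypothetical:

```python
from typing import List, Type

from pydantic import BaseModel, Field, ValidationError, create_model


class Gift(BaseModel):
    # Trimmed to two fields to keep the example short.
    name: str
    description: str


def make_gift_ideas_model(min_ideas: int, max_ideas: int) -> Type[BaseModel]:
    """Build a GiftIdeas schema whose list length is bounded by the user's input."""
    if not 0 <= min_ideas <= max_ideas:
        raise ValueError("expected 0 <= min_ideas <= max_ideas")
    return create_model(
        "GiftIdeas",
        gift_ideas=(List[Gift], Field(min_length=min_ideas, max_length=max_ideas)),
    )


# Usage: bounds as they might arrive from the form's minimum/maximum fields.
GiftIdeas = make_gift_ideas_model(1, 5)
print(GiftIdeas.model_json_schema()["properties"]["gift_ideas"])

# Validation enforces the same bounds on any parsed output.
try:
    GiftIdeas.model_validate({"gift_ideas": [{"name": "n", "description": "d"}] * 6})
except ValidationError:
    print("six ideas rejected")
```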

Running .gifter

When you press “Generate Gift Ideas”, the application passes the user’s specifications to the model and produces a report of gifts it thinks the person will like.

Figure 4: The .gifter web interface loading screen.

The reasoning step

The first model-generated content the user will see is the model’s reasoning step. When I’m working with structured generation tasks, I will typically include a reasoning step because it can often improve model performance. It’s also a useful barometer to see what the model observes, which can assist with debugging input data or prompting issues.
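One way to get a reasoning step with structured generation, sketched here as an assumption about .gifter's schema rather than its actual code, is to declare a `reasoning` field before the list of gift ideas. Pydantic keeps fields in declaration order in the generated JSON schema, and Outlines generates object properties in schema order, so the model writes its reasoning before it commits to any gift:

```python
from typing import List

from pydantic import BaseModel


class Gift(BaseModel):
    name: str
    description: str


class GiftIdeas(BaseModel):
    # Declared first, so it comes first in the JSON schema and is
    # generated before any gift ideas.
    reasoning: str
    gift_ideas: List[Gift]


print(list(GiftIdeas.model_json_schema()["properties"]))  # ['reasoning', 'gift_ideas']
```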

Here’s what that reasoning step looks like for my brother’s description:

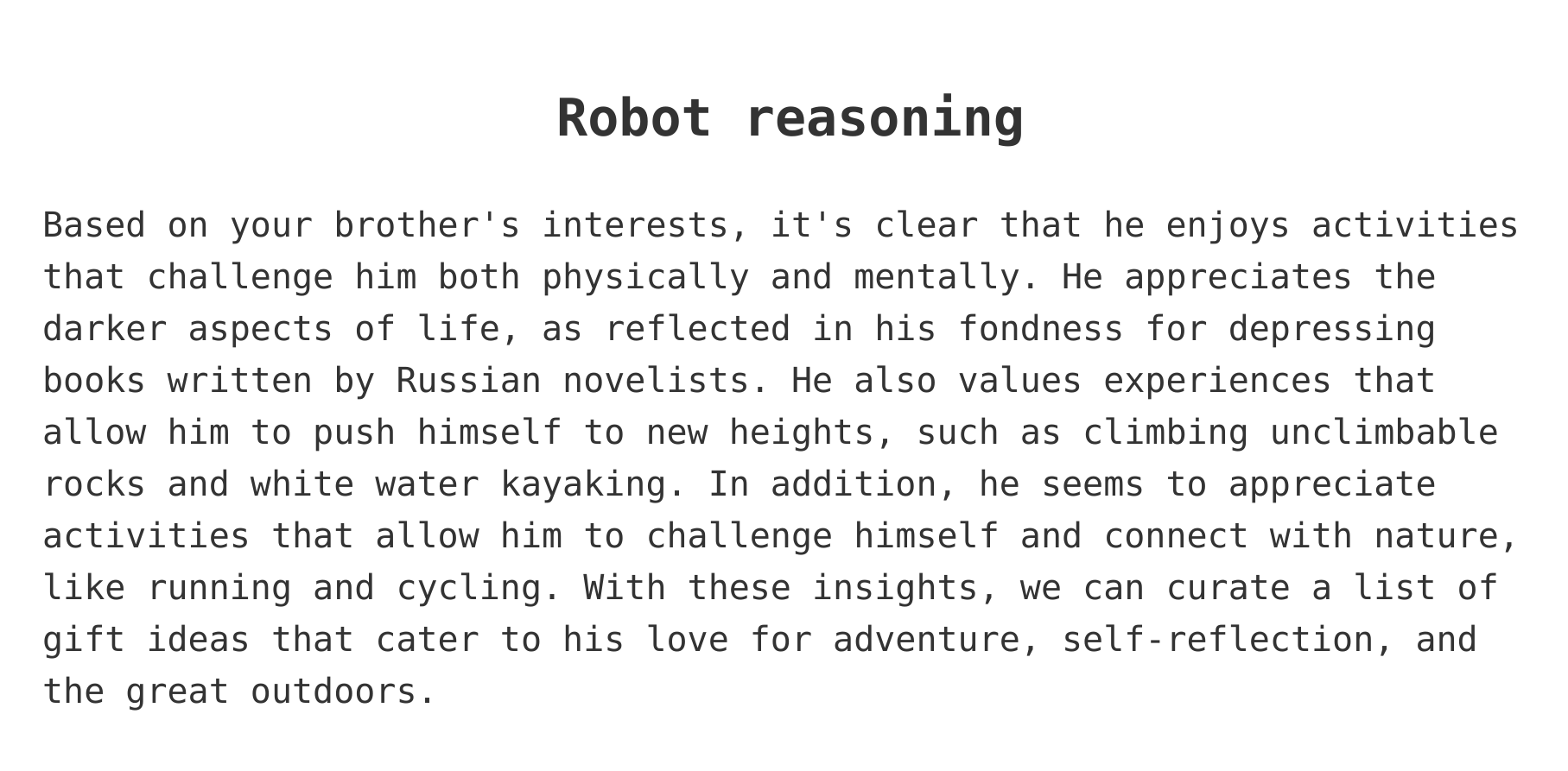

Figure 5: The model reasoning through gifts the person might like.

That’s a pretty solid description of my brother. The model has thought through my brother’s characteristics and has planned for a few broad gift categories (adventure, self-reflection, and the outdoors).

Gift suggestions

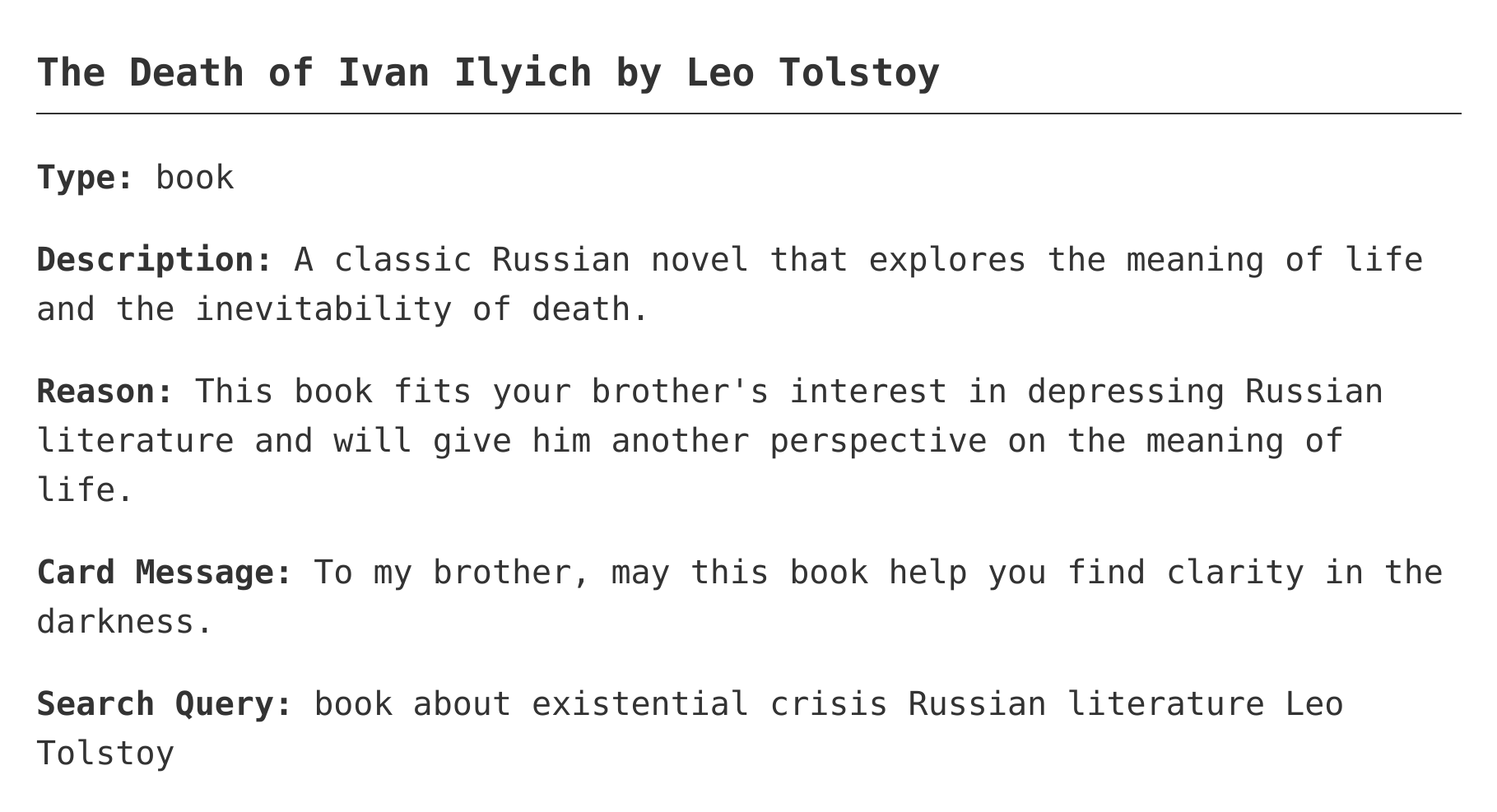

If you scroll down, you will see a list of gift ideas. Here’s the first one:

Figure 6: The first gift recommendation.

Perfect. He loves depressing Russian novels! Is there a better way to celebrate the holidays than by grinding through a book about an existential crisis? I think not.

Each gift idea includes several required fields:

- Description

- Reason for the suggestion

- A message for the gift card, in case you don’t even know what to say to your loved one

- A search query for finding the item

If you are used to working with language models, you’ll know that prompting alone only sometimes produces the structure above. In this case, we have required the model to output each of these fields.

The guaranteed structure is what enables us to build reliable user interfaces. Let me show you how that works under the hood.

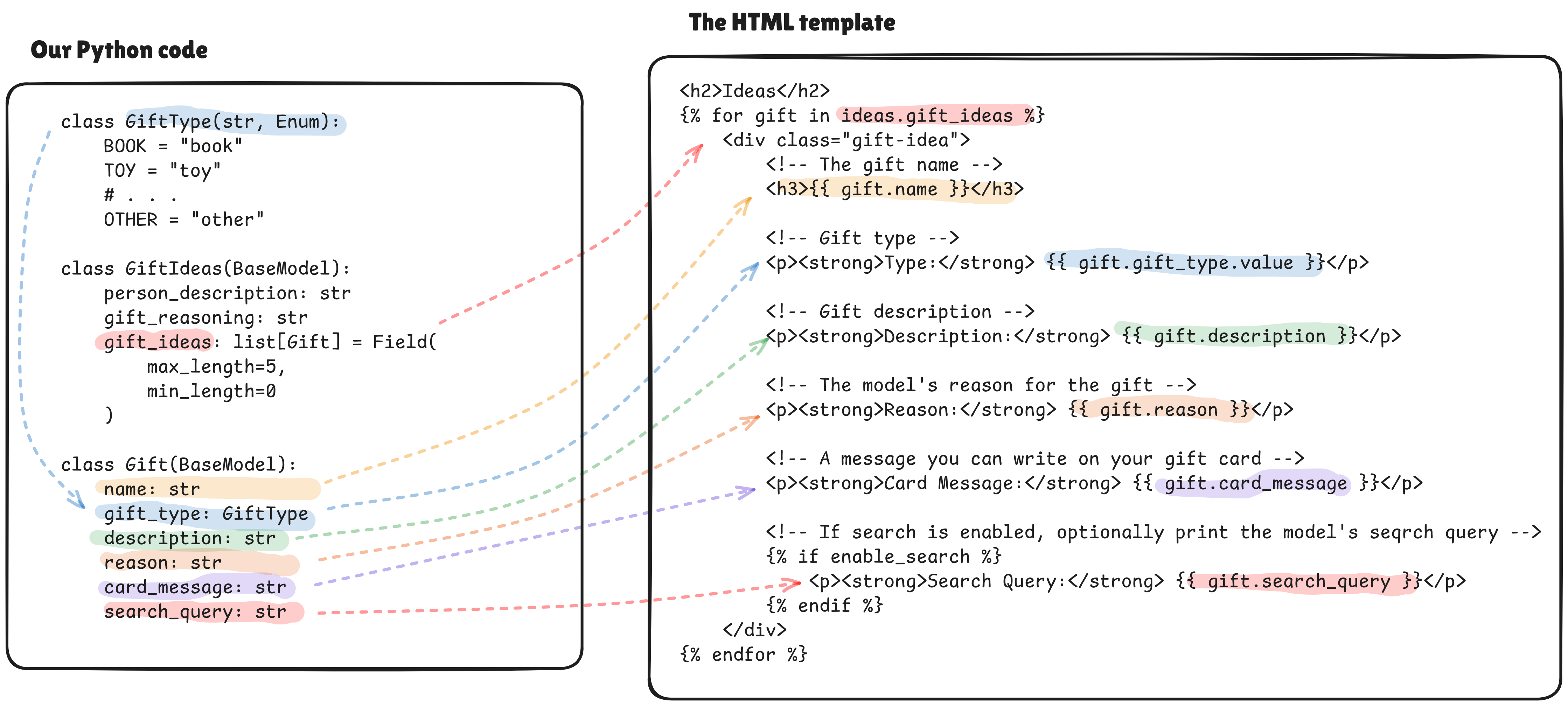

Connecting the back end to the front end

How does .gifter take the LLM-generated gift ideas and render them on a web page?

In Outlines, we typically specify the structure we want to work with as one or more Pydantic classes. The language model then “fills out” these classes when prompted. You can do all the standard things you would do elsewhere, such as inheritance, class methods, and nested classes.
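For example, a hypothetical `Idea` base class can hold shared fields, and `Gift` can inherit from it and add a helper method. Only fields become part of the JSON schema the model fills out; methods such as the illustrative `label` below are ordinary Python that runs after generation:

```python
from pydantic import BaseModel


class Idea(BaseModel):
    """Hypothetical base class with fields shared by every kind of idea."""

    name: str
    description: str


class Gift(Idea):
    """Inherits `name` and `description`, and adds gift-specific fields."""

    card_message: str
    search_query: str

    def label(self) -> str:
        # Plain Python method: not part of the schema, used after generation.
        return f"{self.name}: {self.description}"


gift = Gift(
    name="Journal",
    description="A sturdy notebook",
    card_message="Write it down!",
    search_query="durable journal",
)
print(gift.label())  # Journal: A sturdy notebook
print(sorted(Gift.model_json_schema()["properties"]))
```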

Our UI uses the Flask web framework and Jinja templates. We simply pass our LLM-generated objects to a pre-written Jinja template that renders to HTML.
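A minimal sketch of that rendering step, using Jinja directly so it runs without a Flask app. The template, field names, and `render_gift_ideas` helper are illustrative assumptions, not .gifter's actual template. Inside a Flask view, the equivalent would be `render_template("gifts.html", ideas=ideas)`, where `gifts.html` is a hypothetical file in the app's `templates/` folder.

```python
from typing import List

from jinja2 import Environment
from pydantic import BaseModel


class Gift(BaseModel):
    name: str
    category: str
    description: str
    reasoning: str
    card_message: str


class GiftIdeas(BaseModel):
    gift_ideas: List[Gift]


# Autoescaping keeps model-generated text from injecting HTML into the page.
env = Environment(autoescape=True)
template = env.from_string(
    """<ul>
{% for gift in ideas.gift_ideas %}  <li>
    <h2>{{ gift.name }} <small>{{ gift.category }}</small></h2>
    <p>{{ gift.description }}</p>
    <p><strong>Why:</strong> {{ gift.reasoning }}</p>
    <blockquote>{{ gift.card_message }}</blockquote>
  </li>
{% endfor %}</ul>"""
)


def render_gift_ideas(ideas: GiftIdeas) -> str:
    """Every attribute the template touches is guaranteed to exist on `ideas`."""
    return template.render(ideas=ideas)
```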

Take a look at this figure, which shows how our Python code maps to the Jinja template we specify for the gift result page:

Figure 7: How the Python gift classes map to the Jinja template for the gift result page.

After the template renders, we can send this to the client. They’ll see the list of gift ideas pictured in the previous section.

This template only works because I can trust that every field will exist. Outlines guarantees that every gift idea will have a name, type, description, and other required fields. Without some form of structured generation, there is a non-negligible chance that fields will be missing, misspelled, or incorrectly typed.
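For contrast, here is a sketch of the defensive parsing you would need if the model were only prompted to return JSON. With Pydantic v2, `model_validate_json` raises a `ValidationError` for malformed JSON, missing or misspelled keys, and wrongly typed values; the trimmed-down `Gift` and the `parse_gift` helper are hypothetical:

```python
from typing import Optional

from pydantic import BaseModel, ValidationError


class Gift(BaseModel):
    name: str
    description: str
    category: str


def parse_gift(raw_json: str) -> Optional[Gift]:
    """Return a Gift, or None if the raw model output doesn't match the schema."""
    try:
        return Gift.model_validate_json(raw_json)
    except ValidationError as err:
        # In a real app you might log `err.errors()` and retry the request.
        print(f"rejected model output: {err.error_count()} error(s)")
        return None


# A misspelled key ("desciption") means "description" is missing.
parse_gift('{"name": "Hand holds", "desciption": "Climbing holds", "category": "activity"}')
```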

Let’s add one more feature: search functionality that gives the user a little more information about each gift.

Search integration

Structured generation also makes it trivial to extend the behavior of our applications.

In this case, I ask the model to produce a search query for each gift recommendation (you can see this in the figure above). Using Exa’s simple search API, we can quickly go from the model-provided query to search results.

Let’s take a look at the Python code that searches for each `Gift` the model generates. Since each gift is just a standard Python object, we can hand its model-generated search query straight to Exa:

```python
import os

from exa_py import Exa

exa_client = Exa(api_key=os.getenv("EXA_API_KEY"))


def search(gift):
    """Search for gift-related content using the Exa API."""
    # Note: API error handling has been omitted for brevity.
    result = exa_client.search_and_contents(
        gift.search_query,
        type="neural",
        use_autoprompt=True,
        num_results=3,
        highlights=True,
    )
    return result


# Search for information about each gift idea.
# `ideas` is the model-generated object holding the list of gifts.
search_results = [search(gift) for gift in ideas.gift_ideas]
```

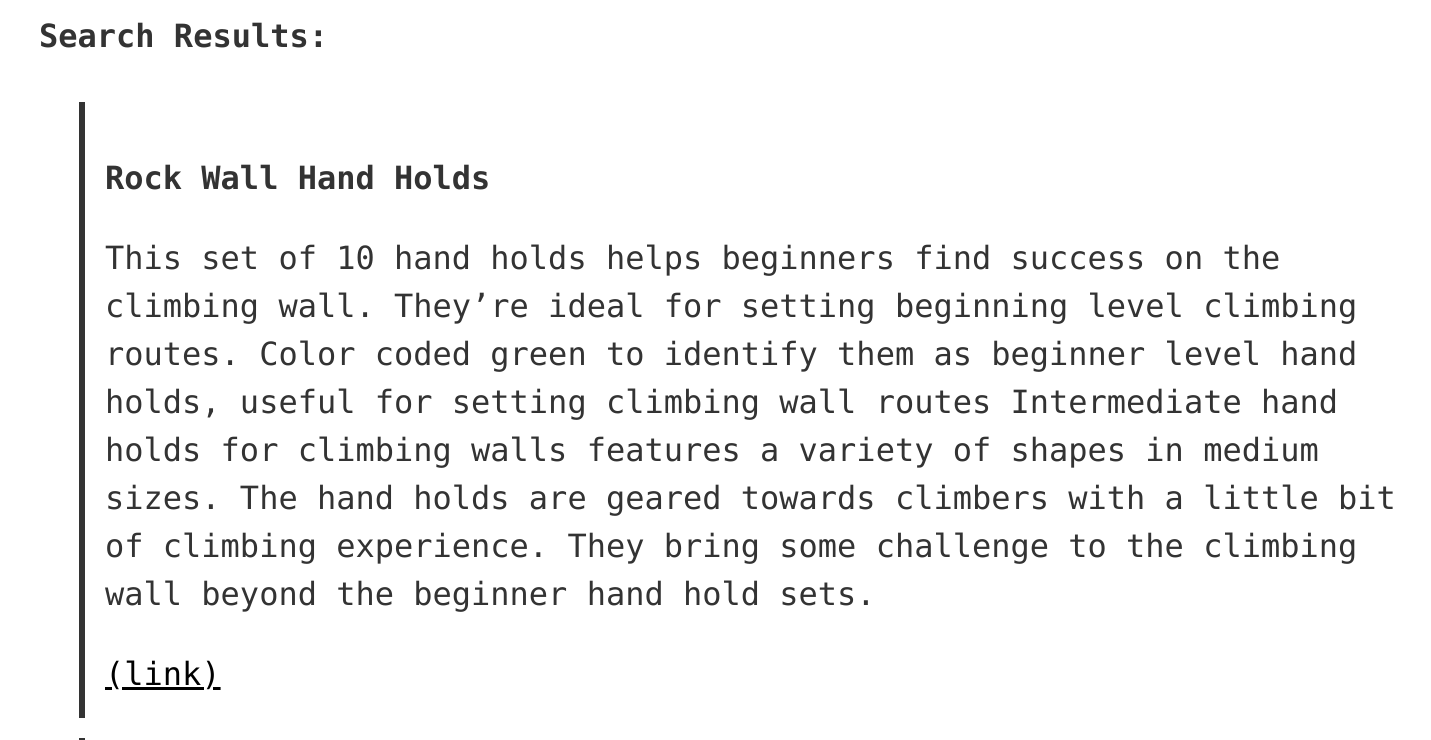

After this, we can render the search results directly into our web application (through some omitted code, which you can see here):

Figure 8: The .gifter web interface showing the search results.

I was able to insert a search result title (“Rock Wall Hand Holds”), a brief highlight provided by Exa, and a link to the web page. Easy peasy.
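A sketch of the glue between Exa and the template, under two assumptions: that each Exa result exposes `title`, `url`, and `highlights` attributes (as I understand results from `search_and_contents(..., highlights=True)` in the `exa_py` SDK), and that the template wants one title, one highlight, and one link per result. The `Result` dataclass and `to_result_cards` helper are hypothetical stand-ins so the example runs without an API key; with the real SDK you would pass the `.results` list of each search response.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Result:
    """Hypothetical stand-in for one Exa search result."""

    title: Optional[str]
    url: str
    highlights: List[str] = field(default_factory=list)


def to_result_cards(results: List[Result]) -> List[Dict[str, str]]:
    """Reduce each search result to a title, one highlight, and a link."""
    cards = []
    for r in results:
        cards.append(
            {
                "title": r.title or r.url,  # fall back to the URL if untitled
                "highlight": r.highlights[0] if r.highlights else "",
                "url": r.url,
            }
        )
    return cards
```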

The takeaway I want you to have from adding search results is that it is simple to combine (a) well structured language model output with (b) standard programming tools.

Want a deeper dive into the technical details? Check out our YouTube video, or look at the code for .gifter on GitHub.

Conclusion

Building applications with language models often means spending more time managing their quirks than building features users want. What makes .gifter interesting isn't the language model; in fact, the model is intentionally the most boring part. By using structured generation, I know exactly what shape of output I'll get, every time.

Reliable language output lets me focus on what actually matters:

- Building useful features

- Creating intuitive interfaces

- Developing robust infrastructure

But more importantly, .gifter was an attempt at improving my gift-giving skills. It addressed a real need of mine, and I was able to build a simple, predictable web application that uses language models as tools to meet that need.

In this case, though, I'll remember that sometimes the best solutions aren't technical at all. Spending time with people you love is often the best gift you can give, though having a few backup ideas from the model can’t hurt.

Happy holidays everyone!