New rules for AI

There are hidden rules in every system.

Rules to govern the sentences we write, the software we code, and the music we play. These rules aren’t created to subjugate, but to integrate. Without them, the different parts of a system can’t work together to form something greater.

Some believe that these rules reflect our uniquely human way of thinking and conceiving the world. But the same is true for computers: without structure, there is chaos. Keywords and variable names, out of order, don't make a program. You need a grammar, a syntax, to make the system work.

For almost a century, we've been teaching our machines the grammars of our world to help us work, play, and create better. But so far, our AIs remain stubbornly resistant to learning them. While they can string together sentences that emulate human syntax, they have yet to learn the syntax of computers and of the applications that run our world.

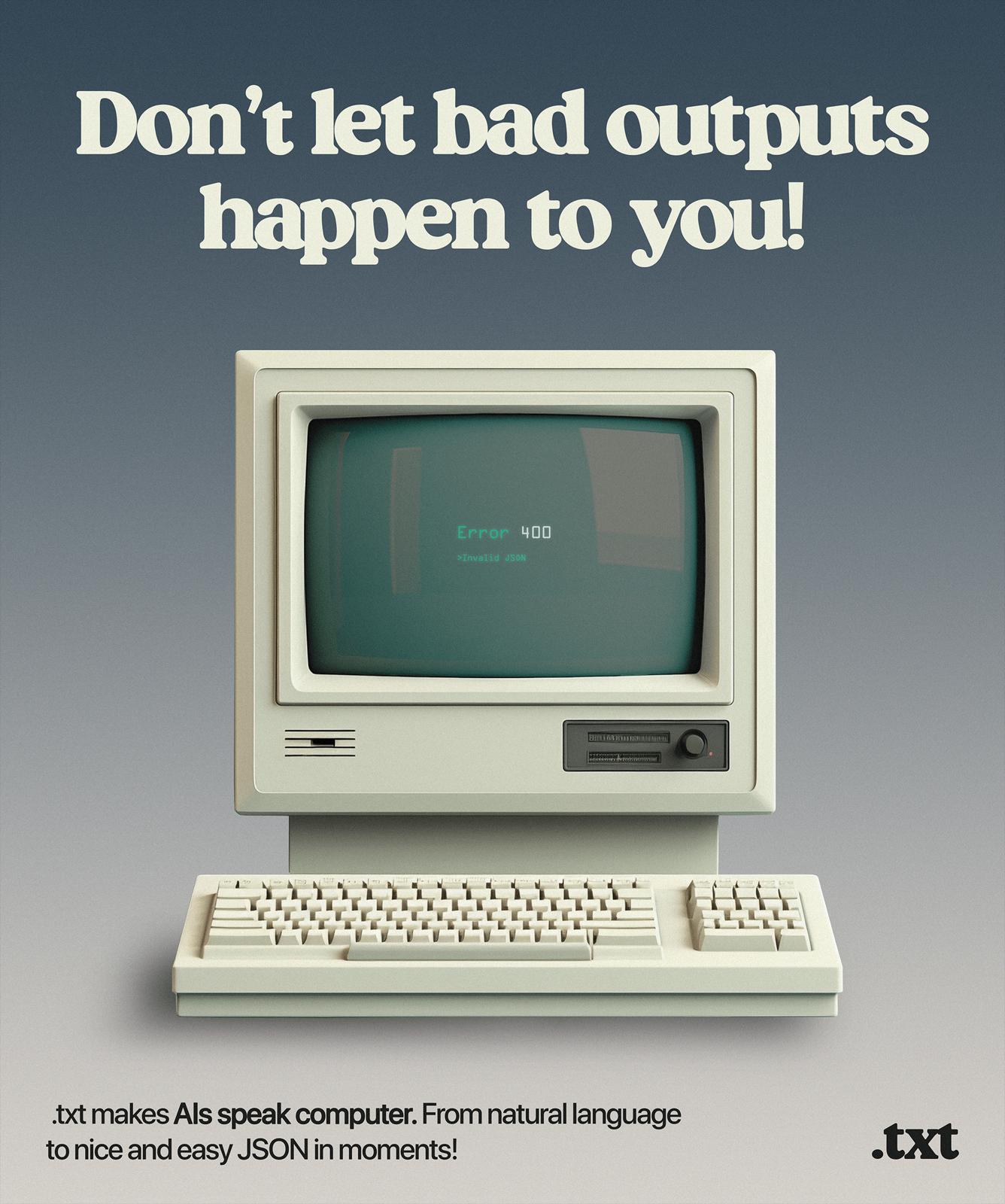

Without more predictable outputs, LLMs simply can’t be integrated into our systems and businesses. It’s their fundamental flaw.

We believe this demands a new programming paradigm, one that allows LLMs to respond in a way that software understands. Until then, LLM generations are fun, but they are not transformative.

Until then, we have only seen the beginning of how AI will change our world.

Our mission is to help AI speak the language of every application.

This is why we built .txt. A tool to make LLMs reliable enough for the world to build on. A spellcheck for programming the future. And more broadly, an ecosystem where developers can design, execute, deploy, and evaluate LLM applications.

But how? LLMs are probabilistic by nature, so we believe the only solution is to implement guardrails from the outset, based on your unique criteria: at every step, take the probabilities of all possible next tokens and set those of the useless ones to zero. In other words, .txt stops LLMs from making errors before they even make them.
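To make the "set the useless probabilities to zero" idea concrete, here is a minimal sketch of the general technique, often called constrained decoding or logit masking. It is not .txt's implementation: the five-token vocabulary, `fake_model`, and the "answer must be exactly yes or no" constraint are all hypothetical, chosen only to show how masking a disallowed token's logit to negative infinity makes its probability exactly zero.

```python
import math

# Hypothetical toy vocabulary; real tokenizers have tens of thousands of tokens.
VOCAB = ["yes", "no", "maybe", "I think", "<eos>"]

def fake_model(prefix):
    """Hypothetical stand-in for an LLM forward pass: one logit per vocabulary entry.
    It always prefers chatty tokens, which is exactly the problem."""
    return [1.0, 0.5, 2.0, 3.0, 0.1]

def allowed_tokens(prefix):
    """Hypothetical constraint: the output must be exactly 'yes' or 'no', then stop."""
    return {"yes", "no"} if not prefix else {"<eos>"}

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]

def mask(logits, allowed):
    """Set the logit of every disallowed token to -inf, so its probability becomes 0."""
    return [x if tok in allowed else -math.inf for tok, x in zip(VOCAB, logits)]

def generate(constrained, max_steps=5):
    prefix = []
    for _ in range(max_steps):
        logits = fake_model(prefix)
        if constrained:
            logits = mask(logits, allowed_tokens(prefix))
        probs = softmax(logits)
        # Greedy decoding: pick the most probable token.
        token = VOCAB[probs.index(max(probs))]
        if token == "<eos>":
            break
        prefix.append(token)
    return prefix

print(generate(constrained=False))  # ['I think', 'I think', 'I think', 'I think', 'I think']
print(generate(constrained=True))   # ['yes']
```

Unconstrained, the toy model rambles until it hits the step limit; constrained, it can only emit a valid answer and stops after one token, which is also why constraining output can mean fewer generated tokens.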

Fewer tokens. Less money. More efficiency.

We are not the average AI engineers. We cut our teeth in applied statistical modeling, a job where you build bespoke models specifically designed to give only correct answers. To us, applying this field to LLMs is the logical next step: it is the missing layer that will make AI truly useful, cutting out the noise and uncertainty endemic to LLMs.

This is what makes LLMs act like computers. Finally. If your goal is to sound conversational and believable, everyday language works just fine. But to be good enough to compute with, the output needs far greater clarity. In this sense, LLMs, which speak our loose everyday language rather than the precise languages of software, are ironically a regression!

So, let’s create the new rules for AI and invent a new way to program with them… together. Join the discussion on Discord. Or just tweet at us. We can’t wait to see what you create!